What is the difference between AI Twin, Digital Twin, and AI Avatars?

People keep tossing around AI Twin, Digital Twin, and AI Avatar like they’re interchangeable, but they’re actually pretty different. Understanding the difference matters, because mixing them up can waste resources. Marketers chasing personal branding might pick generic avatars that feel inauthentic and fail to convert, or invest in industrial digital twins expecting human-like spokespeople.

In this blog, we break down all three, look at how each one works, and compare them side by side. Read it till the end so your future marketing plans are built on the right tool.

What Is An AI Twin?

Think of an AI twin as your digital double, built specifically to act, talk, and even decide stuff pretty much as you would. It’s not some generic chatbot or cartoon character. Instead, it’s trained on your own material: hours of video where you ramble about ideas, voice clips, old emails, social posts, maybe even how you gesture when you’re excited or annoyed.

The result? A version of you that can crank out videos, reply to comments, sit in on virtual meetings, or answer questions in your voice and style without you having to show up every single time. In 2026, tools are popping up at places like CES where execs get “MyPersonas” clones of key employees so knowledge stays available 24/7, even in multiple languages.

How Does an AI Twin Work?

Alright, here’s the straightforward scoop on how an AI Twin actually comes together in 2026. Nothing too fancy, just what people are really doing right now.

You kick things off by giving the platform your raw material. Tools like Tagshop AI and Synthesia make it easy to create an AI twin. We’re talking 2 to 5 minutes of you just chatting to the camera: good lighting, face clear, no weird background noise, speaking like you normally do. Some platforms ask for more, such as extra clips, voice samples, old posts, emails, or even notes about your vibe and habits, so the twin captures the full picture.

Upload all that, and the system goes to work. It scans your face for every little expression and head tilt, and clones your voice down to the accent, pauses, energy level, and those random “um”s you drop.

Processing usually takes anywhere from a few hours to a full day. From there, feed it a script, question, or prompt, and it either spits out a video with lip-sync and natural gestures or types out replies in your writing style. Want ongoing tweaks? Keep feeding it new recordings or interactions, and it slowly gets even better at being “you.”
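If you want to sanity-check a recording before uploading it, the guidelines above can be turned into a quick checklist. This is only a rough sketch in Python: the function name `check_recording` and its parameters are made up for illustration, and no platform mentioned here is known to expose anything like it.

```python
def check_recording(duration_seconds, well_lit, face_visible, background_noise):
    """Return a list of things to fix before uploading; an empty list means ready.

    Hypothetical helper based only on the tips in this post
    (2 to 5 minutes, good lighting, clear face, no background noise).
    """
    problems = []
    if not 120 <= duration_seconds <= 300:
        problems.append("aim for 2 to 5 minutes of footage")
    if not well_lit:
        problems.append("improve the lighting")
    if not face_visible:
        problems.append("keep your face clearly in frame")
    if background_noise:
        problems.append("record somewhere quieter")
    return problems


# A 90-second clip recorded in a noisy room fails two checks.
issues = check_recording(90, well_lit=True, face_visible=True, background_noise=True)
print(issues)
```

Each platform has its own upload requirements, so treat the thresholds here as a starting point and check the tool you actually use.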

Read More: How to create AI videos using your AI twin?

What is a Digital Twin?

Okay, let’s talk about what a Digital Twin actually is.

It’s a live virtual copy of something real and physical. It could be one machine, a full production line, a wind turbine out in a field, a bridge, or even parts of a city. The key thing is it doesn’t just sit there like a fancy 3D drawing. This twin stays linked up and updates constantly to show exactly what’s going on with the real version at any moment.

Now in 2026, it’s everywhere in big industries. Companies build these twins to keep an eye on how stuff performs right now, run tests on changes without any risk, spot when something might break soon, or find ways to make operations cheaper and smoother. For example, Singapore has created one of the world’s most advanced digital twins of an entire city. This 3D model includes every building, road, and even individual trees.

It’s all focused on physical things, systems, or processes, not people. In factories, energy plants, and construction sites, it’s saving serious money by fixing problems before they happen or tweaking designs early. Think of it as a super-reliable mirror that helps avoid expensive surprises.

How Does a Digital Twin Work?

Here’s the step-by-step on how a Digital Twin actually runs day to day.

You put sensors all over the real object. Temperature gauges, vibration detectors, pressure sensors, motion trackers, whatever makes sense for that thing. These IoT devices keep sending fresh data every second or minute to a main software platform. Big names like Siemens, IBM, or AWS handle a lot of these setups.

The software starts with a base model, often pulled from original design files, CAD drawings, physics rules, or past performance records. As live data comes in, the twin refreshes itself right away. Does the real engine get hotter? The digital one shows it too. Conveyor slows down? So does the twin.

Next, the smart part happens. AI and simulation tools let you play around. Crank up speed 15 percent in the twin and see what breaks or improves. Notice odd vibrations? It warns you to check that part before it fails. It’s not one-way. Good ideas from the twin go back to the real world: adjust settings, schedule repairs, change designs.
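To make that loop a bit more concrete, here is a toy sketch in Python of the three steps described above: take in live readings, warn on odd vibrations, and run a what-if speed test. Everything in it is an assumption for illustration: the `ConveyorTwin` class, the simple straight-line temperature model, and all the numbers are invented, and it does not reflect how Siemens, IBM, or AWS products actually work.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class ConveyorTwin:
    """Toy digital twin of a conveyor motor (hypothetical, not a vendor API)."""
    base_temp_c: float = 40.0      # assumed idle temperature from design data
    temp_per_speed: float = 0.5    # assumed degrees C added per unit of speed
    max_temp_c: float = 90.0       # assumed safe operating limit
    vibration_limit: float = 3.0   # assumed vibration alarm threshold
    speed: float = 0.0
    temp_c: float = 0.0
    vibration_history: list = field(default_factory=list)

    def ingest(self, reading):
        """Refresh the twin's state from one live sensor reading."""
        self.speed = reading["speed"]
        self.temp_c = reading["temp_c"]
        self.vibration_history.append(reading["vibration"])

    def needs_inspection(self, window=3):
        """Warn when the average of recent vibration readings exceeds the limit."""
        recent = self.vibration_history[-window:]
        return bool(recent) and mean(recent) > self.vibration_limit

    def simulate_speed_change(self, percent):
        """What-if test: predict temperature at a new speed without touching the real machine."""
        new_speed = self.speed * (1 + percent / 100)
        predicted = self.base_temp_c + self.temp_per_speed * new_speed
        return {
            "speed": new_speed,
            "predicted_temp_c": predicted,
            "safe": predicted <= self.max_temp_c,
        }


twin = ConveyorTwin()
for reading in [
    {"speed": 60, "temp_c": 70.0, "vibration": 2.0},
    {"speed": 60, "temp_c": 71.0, "vibration": 3.5},
    {"speed": 60, "temp_c": 72.0, "vibration": 4.1},
]:
    twin.ingest(reading)

print(twin.needs_inspection())        # True: recent vibration averages above the limit
what_if = twin.simulate_speed_change(15)
print(what_if["safe"])                # True: the predicted temperature stays under the limit
```

A real twin would swap the one-line temperature formula for a physics or machine-learning model and read from an actual sensor feed, but the shape is the same: state in, warnings and predictions out.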

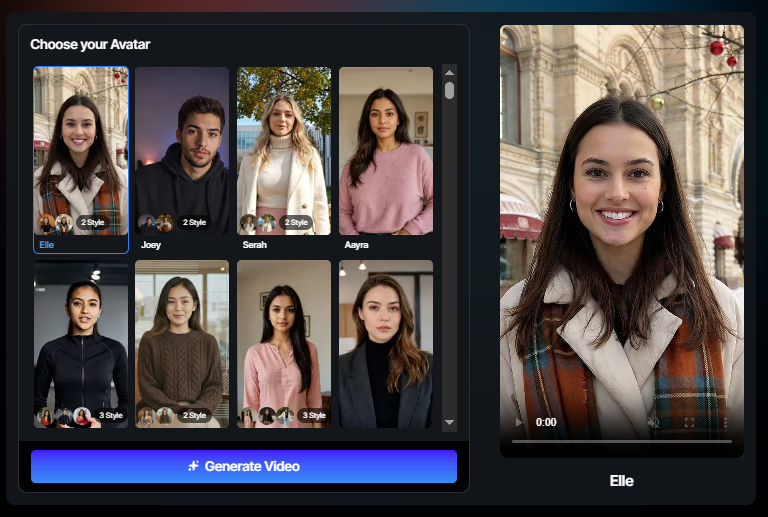

What are AI Avatars?

Okay, moving on to what AI Avatars actually mean in practice.

These are basically digital characters run by AI that look like people and can talk, move, show expressions, and handle conversations or presentations. Some look super realistic, almost like a real human on video, while others go more stylized or cartoonish, depending on what you need.

The big thing is they act as stand-ins for communication. You see them a lot in marketing videos, customer support chats, online training sessions, ads, or even interactive apps. Platforms like Synthesia, HeyGen, D-ID, or Colossyan make it easy to create them. Pick one, add a script, and it speaks with natural lip movements, gestures, and tone.

Unlike an AI Twin that tries to copy one exact person’s whole personality and habits long-term, AI Avatars are usually more general or lightly customized. They’re tools for getting content out fast without filming every time, translating it into different languages, and keeping a consistent “face” for a brand. In 2026, they’re everywhere because they’re cheap, quick, and make digital stuff feel more personal and engaging without needing a camera crew.

How do AI Avatars work?

Most platforms keep it pretty straightforward. You start by choosing from a library of ready-made avatars (tons of options on Tagshop AI or Synthesia) or making a custom one. For custom, you upload a short video clip (usually 2-5 minutes of someone talking naturally) or even just photos plus audio samples. The system scans everything: face structure, how the mouth moves, expressions, voice pitch, accent, and little pauses.

Under the hood, the platform combines a few key pieces of tech. Text-to-speech turns your written script into spoken words with a realistic tone and emotion. Facial animation syncs the lips to the audio and adds eye blinks, head tilts, smiles, or frowns. Gesture engines throw in natural hand movements or body language, so it doesn’t look stiff. If it’s interactive (like live chat support), it hooks up to a language model so the avatar can respond to questions in real time, pulling answers and adjusting on the fly.
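One way to picture how those pieces fit together is as a chain of stages, each adding a layer to the clip. The Python sketch below is purely illustrative: the stage functions are stand-ins that only record what a real engine would do, the 150 words-per-minute speaking rate is an assumption, and none of this is the actual API of Synthesia, HeyGen, D-ID, Colossyan, or Tagshop AI.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AvatarClip:
    """Tracks one clip as it moves through the (hypothetical) stages."""
    script: str
    audio_seconds: float = 0.0
    layers: tuple = ()


def text_to_speech(clip, words_per_minute=150):
    # Stand-in for a real TTS engine: it only estimates audio length
    # from the word count at an assumed speaking rate.
    seconds = len(clip.script.split()) / words_per_minute * 60
    return replace(clip, audio_seconds=seconds, layers=clip.layers + ("speech",))


def animate_face(clip):
    # Stand-in for lip-sync and expressions, which need audio to sync against.
    if clip.audio_seconds <= 0:
        raise ValueError("facial animation needs audio first")
    return replace(clip, layers=clip.layers + ("lip_sync",))


def add_gestures(clip):
    # Stand-in for the gesture engine.
    return replace(clip, layers=clip.layers + ("gestures",))


def render_avatar(script):
    """Run a script through the stages in order: speech, face, gestures."""
    clip = AvatarClip(script)
    for stage in (text_to_speech, animate_face, add_gestures):
        clip = stage(clip)
    return clip


clip = render_avatar("Hello and welcome to our store")
print(clip.layers)  # ('speech', 'lip_sync', 'gestures')
```

The order matters: lip-sync depends on the audio, so speech has to come first. An interactive avatar would add one more step at the front, where a language model writes the script in response to the user’s question.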

Read More: Best practices for using realistic AI avatars in videos.

AI Twin vs Digital Twin vs AI Avatars: What’s the difference?

These three concepts often get lumped together in discussions about virtual representations, especially with rapid advancements in 2026. However, they serve distinct roles based on their targets, data inputs, and practical applications. The table below provides a clear, side-by-side view.

| Aspect | AI Twin | Digital Twin | AI Avatar |
| --- | --- | --- | --- |
| Primary Focus | Replicates one specific individual person (voice, mannerisms, knowledge, style) | Mirrors a physical object, system, or process (machine, factory, building, infrastructure) | Creates a digital character for interaction (realistic or stylized human figure) |
| Data Source | Personal uploads: videos, audio, texts, emails, behavioral patterns from the individual | Real-time sensor/IoT data: temperature, vibration, pressure, usage metrics from the physical asset | Scripts, prompts, stock models, or short custom clips; animation and voice libraries |
| Core Purpose | Extends personal presence: generates content, handles meetings, replies in your place to scale output | Monitors and optimizes assets: predicts failures, simulates changes, reduces downtime and costs | Delivers engaging visuals/communication: videos, ads, support chats, training without full filming |
| Adaptability Level | Evolves with new personal data and interactions to improve mimicry over time | Continuously updates via a live sensor feedback loop for accurate real-time simulation | Primarily script/prompt-driven; some real-time responses, but limited deep evolution |
| Typical Applications | Content creators, executives, consultants, and educators who need to multiply their reach | Manufacturing, aerospace, energy, construction, and healthcare for predictive maintenance and efficiency | Marketing, customer service, e-learning, and retail for quick, scalable human-like engagement |
| Key Limitation | Requires extensive personal data; quality depends on input depth | Needs strong sensor integration; focused only on physical entities | Less personalized depth; more surface-level for broad use |

Conclusion

These three technologies keep evolving fast in 2026, and the lines sometimes blur in marketing pitches or casual talk. But the core stays clear: an AI Twin clones a real person to extend their reach and handle personal tasks without constant effort. A Digital Twin mirrors physical assets or systems to monitor, predict issues, and optimize performance in real operations. An AI Avatar creates flexible digital figures for quick, engaging communication in videos, support, or content.

AI Twins help individuals scale themselves, Digital Twins drive efficiency in heavy industries, and AI Avatars make digital interactions feel more natural and affordable. As tools improve, hybrids might pop up more, but understanding the real differences now saves time and gets better results. The future looks virtual, and these are the main ways we’re getting there.

Frequently Asked Questions

No, they’re quite different. An AI Twin copies one specific person for personal or creative work, like videos and chats. A Digital Twin replicates physical objects or systems, like machines or buildings, for monitoring and improvement using sensor data.

They’re more than basic cartoons now. Many look realistic, speak naturally, gesture, and respond in real time for things like customer help, training videos, or ads. They add a human touch to digital content without being a full personal clone.

AI Twins rely the most on real human data: your videos, voice recordings, writing samples, and habits. AI Avatars might use some short clips for custom looks, but often start generic. Digital Twins pull from sensors on physical things, not personal human info.

Yes, a lot of them do. They take in new interactions, fresh recordings, or feedback to get closer to matching your style, voice, and decisions as time goes on.

Manufacturing, aerospace, automotive, energy, construction, healthcare, and urban planning use them heavily. They’re great for predictive maintenance, testing designs, and running operations more smoothly.

AI Twins focus on replicating a person for communication, content, or tasks. Digital Twins mirror physical assets or processes for analysis, prediction, and optimization in industrial settings.

AI Twins build deep, evolving copies of one person that adapt over time. AI Avatars are more general or lightly customized digital figures for visuals, scripts, and interactions without that full personal depth.

Content creation, media, education, consulting, executive roles, and any area where scaling one person’s expertise or presence matters most.

They handle routine content, ads, or high-volume posts well and save time, but they lack the raw authenticity, spontaneity, and personal connection humans bring. Most experts say it’s more about teaming up than full replacement: AI augments, and humans keep the real spark.

It depends on the goal. AI Twins work great for deep, authentic brand representation, like a true ambassador clone that evolves. AI Avatars shine for fast, cost-effective visuals in ads, support, or general messaging where broad appeal matters more than exact personal match.

If you need something that truly captures your unique voice, style, and decisions, and keeps improving with new data, an AI Twin gives far more depth and authenticity. Avatars are solid for quick, scripted tasks but don’t match that long-term personal feel.