Introducing AI Twin, Advanced Voice Models, and New Video Generation Capabilities

At Tagshop AI, we’re constantly innovating to make your AI video creation experience more realistic, expressive, and effortless.

Our latest product update brings a major leap forward with three powerful enhancements – AI Twin, Multiple Voice Models (V2 & V3), and new video generation models that redefine what’s possible in AI-generated content.

Let’s dive into what’s new and how these updates will help you create smarter, more human-like AI videos.

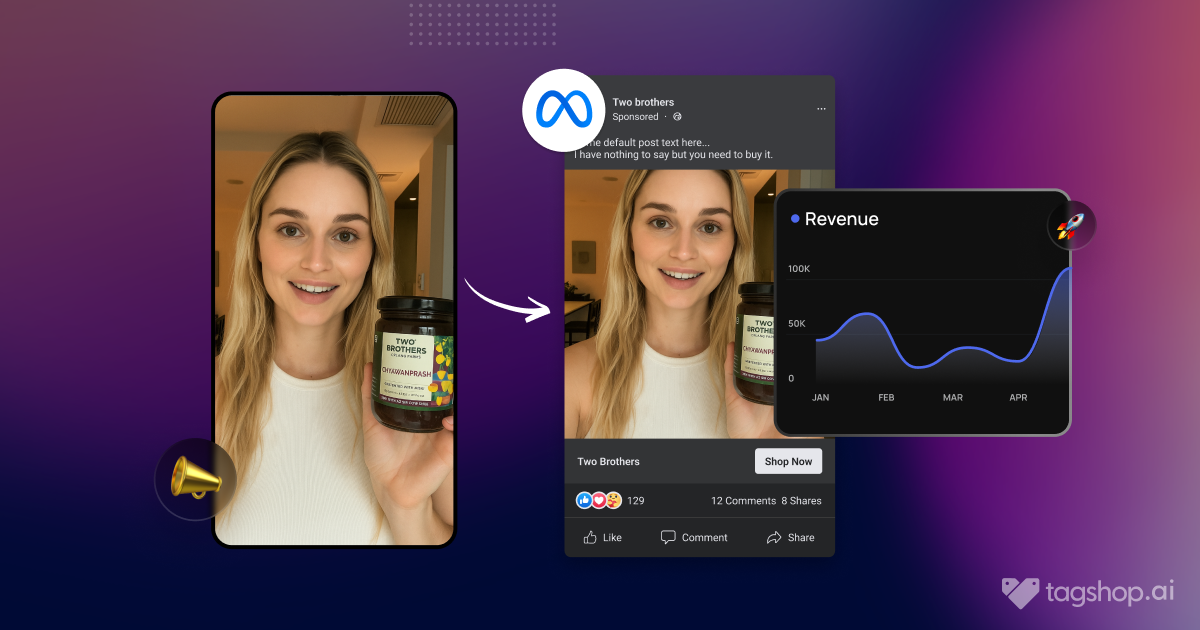

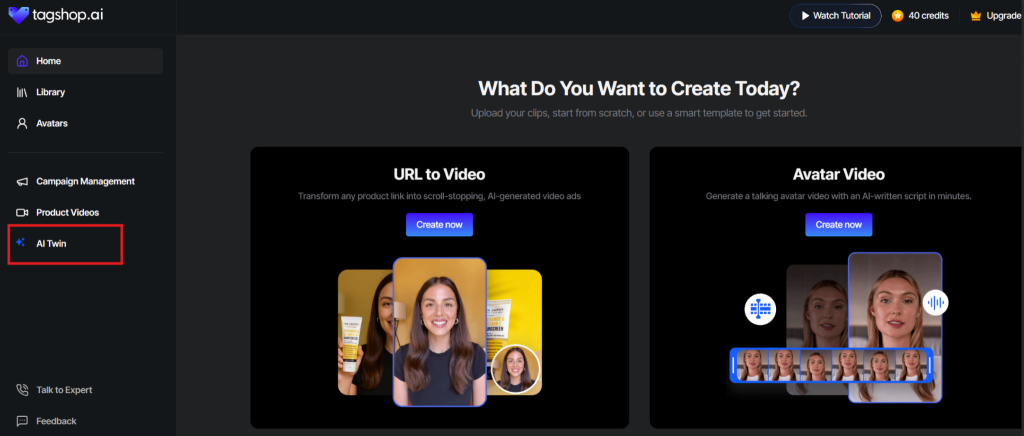

1. Create Your Digital Self with AI Twin

Imagine having a digital version of yourself that looks, speaks, and acts like you – ready to appear in any video, anytime. That’s what the new AI Twin feature makes possible.

With AI Twin, you can:

- Clone your appearance and voice to generate hyper-realistic videos without recording every time.

- Scale your personal brand or business content while maintaining authenticity and personality.

- Save time and effort, especially for creators, marketers, and brands who need frequent video content.

Whether you’re producing ad campaigns, customer onboarding videos, or social media explainers, your AI Twin keeps your content consistent and creative at scale.

2. Next-Level Realism with Multiple Voice Models (V2 & V3)

Voice plays a huge role in making your videos believable and emotionally engaging. Our latest update introduces enhanced voice models with new customization capabilities:

🔹 Voice Speed & Stability

Now you can control how your AI voice sounds – from slow and calm to fast and energetic. Adjusting the speed and stability helps your message fit the exact tone you want.

🔹 Voice Emotions (V3)

Available in Voice Model V3, this feature adds emotional depth to your videos. Choose tones like happy, confident, empathetic, or serious to align perfectly with your content’s purpose.

🔹 Pronunciation Correction

Get perfect pronunciation for brand names, product terms, or unique words. With pronunciation correction, your voiceover now sounds natural and precise – just like a professional speaker.

Together, these voice upgrades bring your AI avatars to life with more personality, clarity, and authenticity.

3. More Realism with New Video Generation Models

We’re also expanding the creative potential of product videos with three new video generation models that push visual realism to the next level.

🔸 Sora 2.0

Designed for cinematic storytelling, Sora 2.0 delivers ultra-smooth motion and enhanced realism. Perfect for product video ads, showcases, and lifestyle visuals that need a professional touch.

🔸 WAN 2.2

A performance-focused model ideal for product demos and user-generated content (UGC) style videos. Expect sharper details, more dynamic backgrounds, and fluid transitions that look studio-made.

🔸 InfiniteTalk (for Talking Videos)

Exclusive to talking videos, InfiniteTalk lets you create realistic face-to-camera videos up to 60 seconds long, with up to 30 seconds available to free users.

Enjoy natural lip-sync, expressive facial movements, and seamless speech generation for authentic-looking talking avatars.

Why These Updates Matter

These features aren’t just technical upgrades; they’re designed to help you create videos that connect better with your audience.

- More personal: Your AI Twin ensures every video reflects your unique style.

- More emotional: Voice Emotions and Pronunciation Correction make your delivery believable.

- More dynamic: The new models enhance video realism, making your content scroll-stopping and ad-ready.

In short, your videos will now look real, sound natural, and feel human.